AI in Curriculum Design: 8 Game-Changing Ideas in 2026

TL;DR

- What it enables: AI transforms curriculum development by creating personalized, comprehensive, and effective educational experiences for students.

- What it includes: Knowledge base training, data-driven content creation, visual generation, assessment automation, and personalized learning paths.

- Key elements: Natural language processing, predictive analytics, real-time adaptation, and collaborative human-AI educational design approaches.

- Top 8 for 2026 include: Knowledge base training, tailored content drafting, streamlined course creation, and predictive performance analytics.

- Why it matters: AI enhances educator efficiency while providing students with adaptive, engaging learning experiences tailored to individual needs.

The Role of AI in Curriculum Development

When we think of artificial intelligence (AI), we often relate it to fields like computer science or data analysis. But have you ever considered its role in curriculum development?

AI’s role in curriculum development is to make the educational process more personalized, comprehensive, and effective. From keeping course content relevant to making human educators more efficient, AI is changing how curricula are designed and generated.

It’s like having a curriculum designer who never sleeps, constantly learning and adapting, and always aiming for the best possible student learning outcomes.

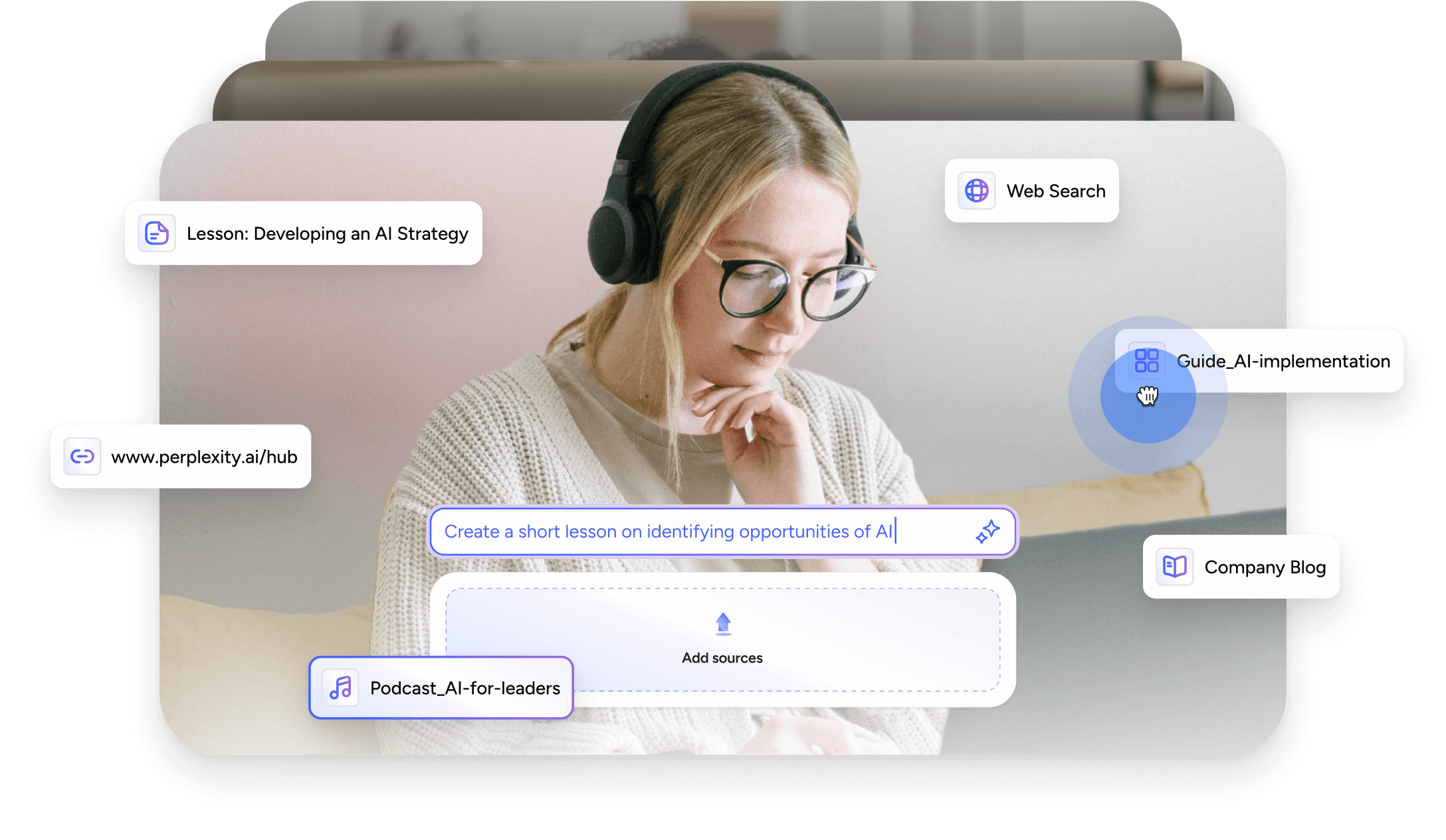

Disco AI, for example, is a versatile tool that drafts lessons, suggests course names, generates content and images, and answers user questions using your data. This lets instructional designers amplify their expertise, save time, and focus on crafting better learning experiences.

What is AI in Education?

In simplest terms, artificial intelligence (AI) in education means machines simulating human intelligence processes, such as learning and reasoning, to support teaching and learning. This goes beyond traditional educational tools, extending to areas like accessibility and personalized learning. The AI tools used in schools today are narrow AI, designed to perform specific tasks; general AI, capable of performing any intellectual task a human can, remains hypothetical. Even so, narrow AI is gradually becoming an integral part of our educational institutions.

It’s like having a digital co-instructor capable of providing personalized educational experiences, engaging learners, and fostering a dynamic, interactive learning environment.

➡️ We wrote a letter about the learning revolution in 2024. Read what we think about the future of learning with AI.

How Does AI-Driven Curriculum Design Work?

AI-driven curriculum design comes down to blending technology and pedagogy. AI technologies like Disco AI and Consensus GPT assist educators in creating effective learning experiences.

These tools enhance course content, improving the quality and relevance of learning materials. It’s like having an intelligent tutor that adapts to the learner’s needs, continuously improving instructional design, and keeping learners engaged.

This not only aids in educational pursuits but also contributes to transforming education by making the course development process more efficient and learner-centric.

8 Innovative Use Cases of AI for Curriculum Design (2026)

#1. Train AI with a Knowledge Base to Enhance Productivity

By integrating AI with a custom knowledge base, educators can significantly boost the productivity of their curriculum design.

The process begins with feeding the AI system a wealth of information specific to the educational content and outcomes desired. The AI then processes this data, learning from the structure, content, and pedagogical approaches within the knowledge base.

As it learns, the AI can begin to suggest content enhancements, create new material that aligns with the established teaching goals, and even identify areas where the curriculum might be expanded or refined. This personalized approach ensures that the curriculum remains relevant and tailored to the unique context of the learning environment, providing a more effective and engaging experience for students.
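One common way to ground an AI system in a knowledge base is retrieval: find the passages most relevant to a request and hand them to the model as context. The Python sketch below illustrates only that lookup step, under stated assumptions; it is not how Disco AI works. It ranks hypothetical knowledge-base passages by how many words they share with a question, whereas production systems typically compare vector embeddings. `KNOWLEDGE_BASE`, `tokenize`, and `retrieve` are names invented for this example.

```python
import re
from collections import Counter

# Hypothetical knowledge base: passages an educator might upload.
KNOWLEDGE_BASE = [
    "Module 1 introduces photosynthesis and the role of chlorophyll.",
    "Module 2 covers cellular respiration and how cells release energy.",
    "Assessment policy: quizzes are graded automatically after submission.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def retrieve(question: str, passages: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k passages that share the most words with the question.

    Word overlap is an illustrative stand-in for embedding similarity.
    """
    q_words = Counter(tokenize(question))

    def overlap(passage: str) -> int:
        # Counter intersection keeps the minimum count of each shared word.
        return sum((q_words & Counter(tokenize(passage))).values())

    return sorted(passages, key=overlap, reverse=True)[:top_k]


if __name__ == "__main__":
    print(retrieve("How are quizzes graded?", KNOWLEDGE_BASE))
```

Switching to embeddings would change only the scoring function; the rank-and-select structure stays the same.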

At Disco, we allow instructional designers and educators to train Disco AI on their knowledge base to improve content creation and member management. Disco AI is a native AI rather than one powered by ChatGPT, which makes it better suited to delivering high-quality educational content personalized to your learning community.

#2. Leverage AI's Data-Driven Insights to Draft Tailored Content

In education, data is power, and AI makes the most of it. Using natural language processing (NLP), AI can analyze educational materials comprehensively, identifying key concepts, trends, and gaps in the curriculum.

This data-driven insight helps recommend updates in curricula, ensuring they match learning objectives and meet educational standards efficiently. It’s like having an intelligent assistant that provides insights to help educators refine curriculum design and teaching strategies, thus enhancing student learning.
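A much-simplified stand-in for NLP-based gap analysis is to check whether the key terms behind each learning objective appear anywhere in the lesson materials. The sketch below assumes objectives have already been mapped to key terms by hand; `OBJECTIVES`, `LESSONS`, and `find_gaps` are hypothetical, and real NLP tools would also catch synonyms and paraphrases that exact matching misses.

```python
import re

# Hypothetical learning objectives mapped to the key terms each one requires.
OBJECTIVES = {
    "Explain photosynthesis": ["photosynthesis", "chlorophyll"],
    "Describe cellular respiration": ["respiration", "mitochondria"],
    "Compare plant and animal cells": ["cell wall", "chloroplast"],
}

# Hypothetical lesson texts from the current curriculum.
LESSONS = [
    "Plants use chlorophyll to capture light during photosynthesis.",
    "Cellular respiration releases energy stored in glucose.",
]


def find_gaps(objectives: dict[str, list[str]], lessons: list[str]) -> dict[str, list[str]]:
    """Map each objective to the key terms that no lesson mentions."""
    corpus = " ".join(lessons).lower()
    gaps = {}
    for objective, terms in objectives.items():
        # Whole-word matching, so "cell" would not match inside "cellular".
        missing = [t for t in terms if not re.search(rf"\b{re.escape(t)}\b", corpus)]
        if missing:
            gaps[objective] = missing
    return gaps


if __name__ == "__main__":
    for objective, missing in find_gaps(OBJECTIVES, LESSONS).items():
        print(f"{objective}: missing {', '.join(missing)}")
```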

How does it work? Log in to Disco with your account. If you don't have one yet, sign up for our 14-day free trial.

To set up your learning community on Disco, you create learning products, upload content, and invite members. In doing so, you feed the platform your knowledge base, which Disco AI can later draw on for suggestions such as prompts, responses to members, and messages.


#3. Use AI to Streamline Course Creation Process

Educators wear many hats: they are not just teachers but also administrators, planners, and guides. AI tools designed for lesson planning help educators by taking over administrative tasks, allowing them to dedicate more time to effective teaching.

AI is like a digital co-instructor, managing routine educational tasks and empowering educators to devote more energy and time to direct teaching and interacting with students. To ensure you are leveraging AI in curriculum design, here's how to create a learning product:

- Select "Products" and click the “+” icon to start. Choose from the available templates and set the access level: public, members only, or invite only.

- After selecting a template, a variety of pre-formatted elements will be available for customization, ranging from drag-and-drop blocks to different content types. For example, a self-paced course template includes a curriculum section where you can organize modules. Click the “+” icon to add content types such as text, videos, assignments, and quizzes.

- Utilize AI to draft a lesson in seconds. While the AI composes your lesson, you can rearrange modules or add new ones.

Lastly, create an "app" to complement your course with additional features like events, social feeds, channels, and a knowledge library. Publish your course with ease, making it available for registration.


#4. Use AI in Producing Engaging Visual Content

AI is revolutionizing curriculum design by producing engaging visual content. Disco AI generates high-quality images (a video generator may come soon!), providing educators with a virtual graphic designer to create custom visuals that enhance learning. This capability enriches curricula, making complex subjects more accessible and engaging for students.

Our AI image generation works seamlessly inside the Disco platform. Disco AI can generate visuals for logos, banners, course images, content images, and more.

Go to any of your learning products and click on the three dots in the upper right corner of your banner image. Choose "edit block" to generate an image for your banner.

Once clicked, you are asked to type in your prompt. After typing in your prompt, click "generate" and Disco AI will automatically generate the image for you in seconds.

I used it on my own Disco community's cover photo and it works so well with my superheroes theme!

#5. Use AI to Enhance Student Support and Learner Engagement

Learning is not a one-way street. It’s an interactive process that involves active participation from students. AI fosters this interaction by creating dynamic, interactive, and student-centered learning environments. By utilizing multimodal learning experiences, AI-driven educational tools support improved comprehension and engagement, catering to different student learning styles.

It’s like having a classroom that adapts to each student’s unique learning style, fostering innovation and critical thought. Enter Disco, the best learning community platform you can find on the internet. Disco is the only platform that fully combines an LMS with a community platform, unifying all your content and data in one place so you can manage your e-learning business seamlessly.

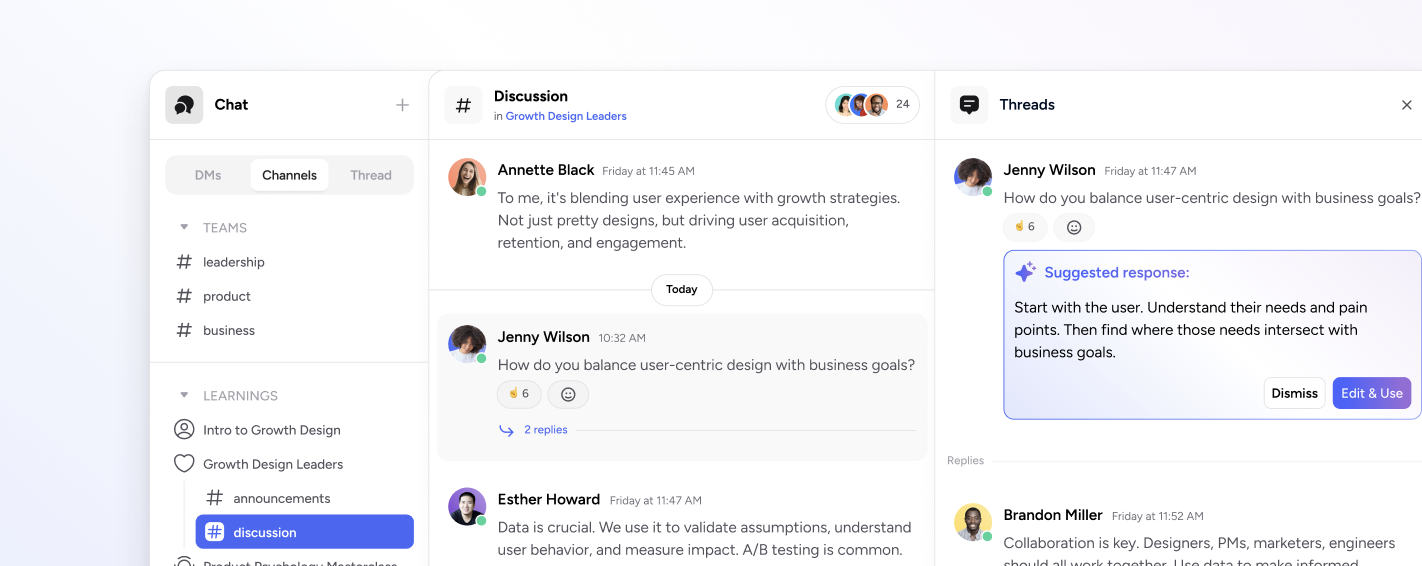

The platform offers a comprehensive suite of tools to foster an engaging educational environment, including social feeds, discussion boards, event management, and messaging.

With Disco AI, educators can facilitate discussions more easily, as the AI suggests informed responses to learners' questions, writes posts, and creates prompts. This elevates the overall educational experience and keeps learning high-quality and transformative.

#6. Use AI to Optimize Assessment and Feedback

Assessment and feedback play a crucial role in the learning process. AI is revolutionizing this aspect of education by:

- Automating administrative tasks, such as grading assignments

- Allowing educators to provide timely feedback to students

- Facilitating efficient, consistent evaluation processes based on predetermined criteria

It’s like having a consistent judge who applies the same criteria to every student’s work and provides valuable insights into each student’s learning progress through performance data.

Automated Grading and Instant Feedback

Grading assignments can be a time-consuming task for educators. AI comes to the rescue by automating the grading process, allowing for fair evaluations, and delivering customized feedback pinpointing students’ strengths and weaknesses.

It’s like having a virtual assistant that frees up educators’ time to engage more directly with their students through teaching and interaction. With Disco's learner progress tracker, you don't need to manually grade and analyze your learners' performance: it is already shown in one unified data analytics dashboard.
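Below is a minimal sketch of automated grading with targeted feedback for a multiple-choice quiz, assuming each question is tagged with a topic. The answer key, topics, and the `grade` function are hypothetical, and this is not Disco's grading logic; it only shows how per-topic results can be turned into the strengths-and-weaknesses feedback described above.

```python
from collections import defaultdict

# Hypothetical answer key: question id -> (correct answer, topic).
ANSWER_KEY = {
    "q1": ("B", "fractions"),
    "q2": ("D", "fractions"),
    "q3": ("A", "decimals"),
    "q4": ("C", "decimals"),
}


def grade(submission: dict[str, str]) -> tuple[float, list[str]]:
    """Return a percentage score plus per-topic feedback for one student."""
    correct_by_topic = defaultdict(int)
    total_by_topic = defaultdict(int)
    for qid, (answer, topic) in ANSWER_KEY.items():
        total_by_topic[topic] += 1
        # Answers are compared case-insensitively; unanswered questions count as wrong.
        if submission.get(qid, "").strip().upper() == answer:
            correct_by_topic[topic] += 1

    score = 100 * sum(correct_by_topic.values()) / len(ANSWER_KEY)
    feedback = []
    for topic, total in total_by_topic.items():
        correct = correct_by_topic[topic]
        label = "Strength" if correct == total else "Review"
        feedback.append(f"{label}: {topic} ({correct}/{total})")
    return score, feedback


if __name__ == "__main__":
    score, feedback = grade({"q1": "b", "q2": "D", "q3": "C"})
    print(f"Score: {score:.0f}%")
    for line in feedback:
        print(line)
```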

Predictive Analytics to Forecast Student Performance

The ability to forecast student performance can be a game-changer for educators. Predictive analytics in education uses historical data and machine learning to forecast student outcomes, aiding educators in tailoring teaching strategies.

It’s like having a crystal ball, enabling educators to identify struggling students and intervene earlier with targeted support, leading to improved student outcomes. Use Disco's leaderboards to gamify your courses, and set up and customize how each student's performance is scored with the engagement scoring system.
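To illustrate how historical data can feed a forecast, here is a minimal logistic-regression sketch trained with batch gradient descent on a tiny, made-up history of (attendance rate, average quiz score) records. The data, the feature choice, the 0.5 cut-off, and all function names are assumptions for this example, not Disco's engagement scoring; a real model would need far more data and proper validation.

```python
import math

# Made-up history: ((attendance rate, average quiz score), passed 1 / not 0).
HISTORY = [
    ((0.95, 0.90), 1),
    ((0.85, 0.80), 1),
    ((0.90, 0.70), 1),
    ((0.40, 0.50), 0),
    ((0.30, 0.35), 0),
    ((0.50, 0.40), 0),
]


def sigmoid(z: float) -> float:
    """Squash a real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train(data, lr: float = 0.5, epochs: int = 5000):
    """Fit logistic-regression weights and bias with batch gradient descent."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), y in data:
            # Gradient of the log-loss is (prediction - label) times the input.
            error = sigmoid(w[0] * x1 + w[1] * x2 + b) - y
            grad_w[0] += error * x1
            grad_w[1] += error * x2
            grad_b += error
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return w, b


def pass_probability(model, attendance: float, quiz_avg: float) -> float:
    """Predicted probability that a student with these features passes."""
    w, b = model
    return sigmoid(w[0] * attendance + w[1] * quiz_avg + b)


if __name__ == "__main__":
    model = train(HISTORY)
    for name, (attendance, quiz_avg) in {"Student A": (0.92, 0.85), "Student B": (0.35, 0.40)}.items():
        p = pass_probability(model, attendance, quiz_avg)
        status = "at risk" if p < 0.5 else "on track"
        print(f"{name}: pass probability {p:.2f} ({status})")
```

Students whose predicted probability falls below the assumed cut-off are the ones an educator might reach out to first.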


#7. Use AI to Create Personalized Learning Paths

AI is revolutionizing education with personalized learning paths. Machine learning algorithms analyze data to understand individual learning patterns, enabling the creation of custom-tailored content that adapts to each student's pace and style.

This approach provides a unique, engaging educational experience akin to having a personal tutor for every learner, ensuring active participation and a deeper understanding of the material.

Real-Time Adaptation to Students' Progress

Just as a skilled teacher observes and adapts to student progress, AI-powered adaptive learning platforms offer the following benefits:

- They adjust lesson difficulty and content based on individual student progress.

- They track students’ achievements and areas for improvement in real time, fostering immediate feedback and tailored instructions.

- They act as a constant monitor of students’ performance, swiftly updating curricula and suggesting evidence-backed strategies to address learning deficits.

This ensures students are consistently challenged at an appropriate level, promoting a more engaging and personalized learning process.
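The real-time adjustment described above can be approximated with a simple up/down staircase rule: raise the difficulty after two correct answers in a row and lower it after a miss. The rule, the 1 to 5 level range, and the `AdaptiveDifficulty` class are assumptions for illustration; adaptive platforms generally rely on richer models of student ability.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveDifficulty:
    """Hypothetical staircase: step up after two correct answers in a row, down after a miss."""

    level: int = 1
    min_level: int = 1
    max_level: int = 5
    streak: int = 0  # consecutive correct answers since the last level change

    def record(self, correct: bool) -> int:
        """Update the difficulty after one answer and return the new level."""
        if correct:
            self.streak += 1
            if self.streak == 2:
                self.level = min(self.level + 1, self.max_level)
                self.streak = 0
        else:
            self.level = max(self.level - 1, self.min_level)
            self.streak = 0
        return self.level


if __name__ == "__main__":
    tracker = AdaptiveDifficulty()
    answers = [True, True, True, True, False, True]
    print([tracker.record(a) for a in answers])
```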

#8. Use AI to Identify and Bridge Knowledge Gaps

One of the most critical aspects of learning is to identify knowledge gaps and fill them. It’s here that AI plays a pivotal role. In a collaborative environment, AI can identify gaps in students’ understanding and suggest accurate, current information to fill those gaps during group discussions.

This aspect of AI in curriculum development contributes significantly to creating comprehensive learning materials that cater to students’ unique learning needs and foster a deeper understanding of complex concepts.

Leveraging AI to Assess Prior Knowledge

Before embarking on a new learning journey, it’s essential to understand where we stand. AI plays a critical role here, employing interactive techniques to determine students’ existing knowledge levels. From directly assessing existing knowledge and skills to delivering personalized support based on a student’s learning style and pre-existing knowledge, AI provides a foundation for accurate and effective teaching strategies.

It’s like having a tool that knows precisely what students know, facilitating the precise targeting of knowledge gaps and ensuring a personalized educational approach.
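A minimal sketch of using a diagnostic pre-assessment to skip what a learner already knows and target the gaps: topics scoring below an assumed mastery threshold of 80% stay on the learner's path, in prerequisite order. The topic sequence, the threshold, and the `plan_path` function are hypothetical.

```python
# Hypothetical topics, listed so that each one builds on the ones before it.
TOPIC_SEQUENCE = ["place value", "fractions", "decimals", "percentages"]

# Assumed cut-off: 80% or more on the diagnostic counts as "already known".
MASTERY_THRESHOLD = 0.8


def plan_path(diagnostic_scores: dict[str, float],
              sequence: list[str] = TOPIC_SEQUENCE,
              threshold: float = MASTERY_THRESHOLD) -> list[str]:
    """Return the topics a learner still needs, in prerequisite order.

    Topics missing from the diagnostic are treated as unknown (score 0).
    """
    return [topic for topic in sequence if diagnostic_scores.get(topic, 0.0) < threshold]


if __name__ == "__main__":
    scores = {"place value": 0.95, "fractions": 0.6, "decimals": 0.85}
    print(plan_path(scores))
```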

The Collaborative Future of AI and Education

The future of education is not just about AI; it’s about the collaboration between AI and human educators. AI has been instrumental in bridging the gap between learning designers and Subject Matter Experts (SMEs), acting as an intermediary that enhances collaboration.

It’s like having a digital bridge, connecting the knowledge of SMEs with the expertise of learning designers and paving the way for a collaborative future of AI and education.

Are you using the ADDIE Model? We've written a case study on how to elevate your ADDIE process using AI.

From understanding AI’s role in curriculum development to exploring its potential in crafting personalized learning pathways, identifying and bridging knowledge gaps, streamlining course creation, enhancing student learning, optimizing assessment and feedback, empowering educators, fostering critical thinking, and preparing students for future challenges, we’ve covered a lot of ground.