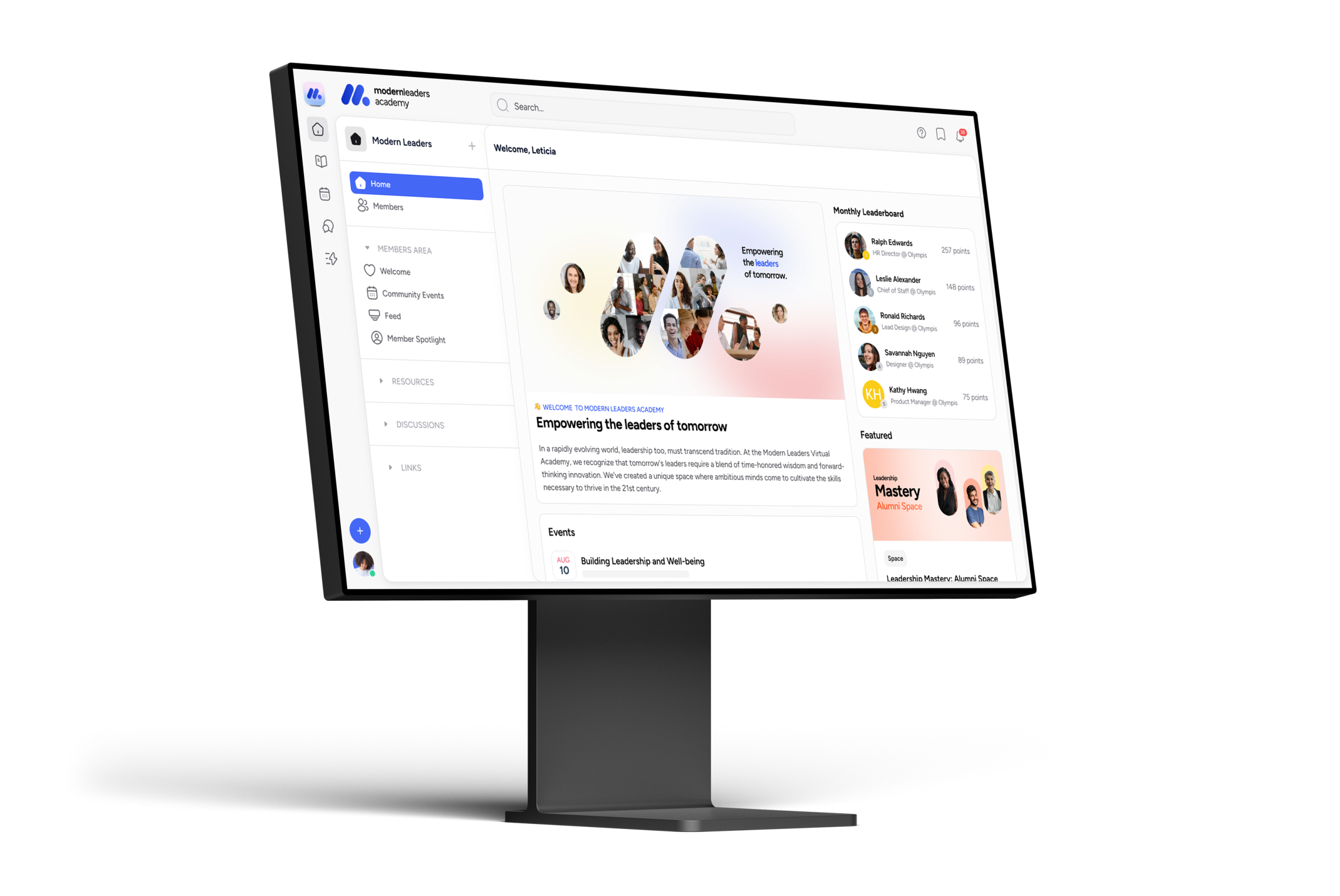

Build, power and scale learning faster with Disco AI

Engage your learners more deeply with their own AI assistant, and help your admin team work 10x faster by using AI to draft entire courses and community engagement content.

Create learning content 10x faster

Build programs with a prompt

Produce learning content faster than ever, from curriculum and lessons to quizzes, modules, assignments, and more.

Write posts and lessons with AI

Wave goodbye to writer’s block with Disco AI, the smartest learning design assistant at your service.

Generate images

Transform your online courses into interactive masterpieces with AI-generated images.

Generate video transcripts & summaries

Automatically transcribe and summarize videos and live events to offer your learners accessible learning content.

Supercharge member engagement

Answer member questions

Ask AI is an assistant trained on your own learning content. Members simply ask a question and get an instant answer (with sources) on any topic.

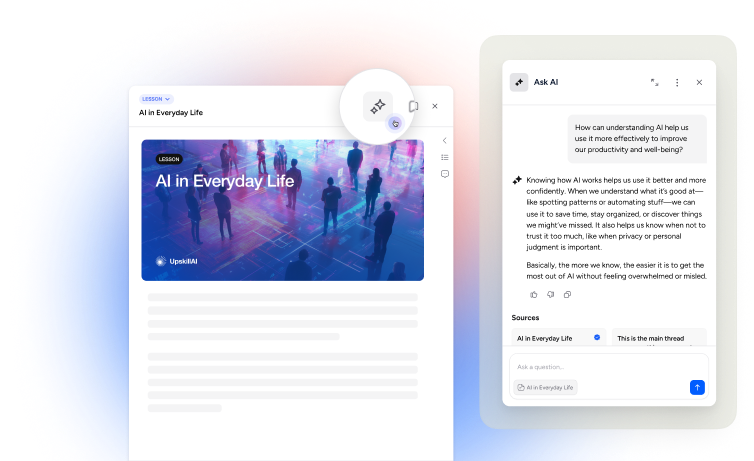

Generative questions in lessons

Encourage deeper learning by enabling generative questions within Lessons. A learner simply clicks the question and Ask AI gives the answer.

Smart engagement nudges

Ignite vibrant discussions with Disco AI’s intelligent prompt suggestions, customized to drive engagement based on member activity.

Scale operations with AI

Save time with suggested replies

Fuel dynamic discussions with smart reply suggestions, so no member question goes unanswered.

Control your training data

Train Disco AI on your own knowledge base so it can accelerate your workflow and boost your team's productivity.

Fine-tune your voice

Craft a thoughtful and well-designed member experience by tailoring your voice to reflect your brand’s character, fostering authentic and meaningful interactions.

Award badges: High Performer · Easiest To Do Business With · Leader, Small Business

AI-first social learning is the learning of the future.

We strongly believe AI is a game changer for social learning and community operators. We are so excited about the promise this new technology holds for scaling personalized and transformational learning.

It is undeniable that the learning operators who embrace AI, using it to reduce cost and resources and to help create transformational learning products that deliver real outcomes, will be the ones who succeed in the future.

We are excited to build this AI-enabled future of learning and community with you.

Candice Faktor

Co-Founder, Disco

Launch your premium academy

Start building your branded academy with AI-powered tools that make it effortless to turn your expertise into transformative learning experiences.